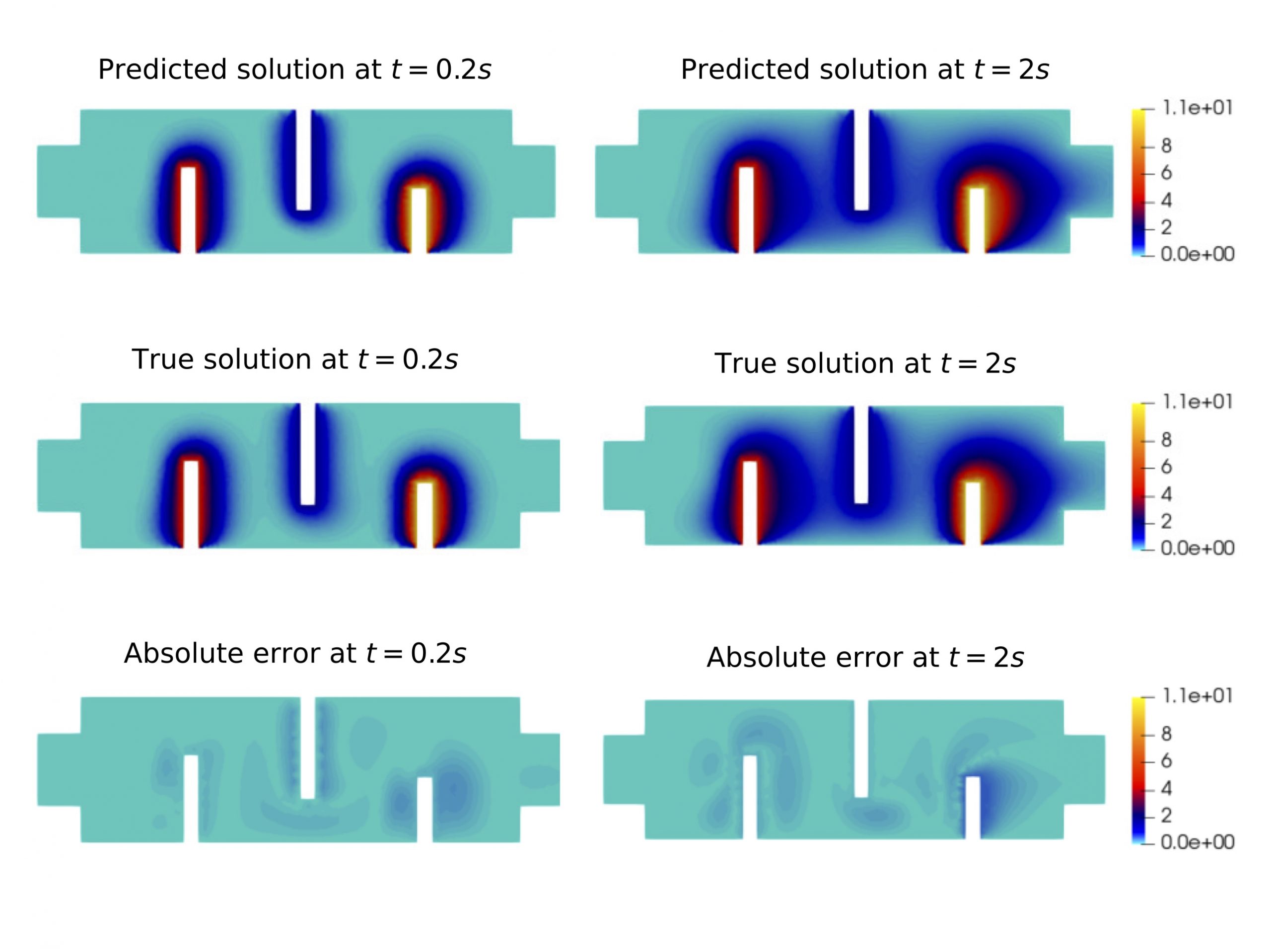

A new MOX Report entitled “Error estimates for POD-DL-ROMs: a deep learning framework for reduced order modeling of nonlinear parametrized PDEs enhanced by proper orthogonal decomposition” by Brivio, S.; Franco, Nicola R.; Fresca, S.; Manzoni, A. has appeared in the MOX Report Collection.

Check it out here: https://www.mate.polimi.it/biblioteca/add/qmox/85-2024.pdf

Abstract: POD-DL-ROMs have been recently proposed as an extremely versatile strategy to build accurate and reliable reduced order models (ROMs) for nonlinear parametrized partial differential equations, combining (i) a preliminary dimensionality reduction obtained through proper orthogonal decomposition (POD) for the sake of efficiency, (ii) an autoencoder architecture that further reduces the dimensionality of the POD space to a handful of latent coordinates, and (iii) a dense neural network to learn the map that describes the dynamics of the latent coordinates as a function of the input parameters and the time variable. Within this work, we aim at justifying the outstanding approximation capabilities of POD-DL-ROMs by means of a thorough error analysis, showing how the sampling required to generate training data, the dimension of the POD space, and the complexity of the underlying neural networks, impact on the solution accuracy. This decomposition, combined with the constructive nature of the proofs, allows us to formulate practical criteria to control the relative error in the approximation of the solution field of interest, and derive general error estimates. Furthermore, we show that, from a theoretical point of view, POD-DL-ROMs outperform several deep learning-based techniques in terms of model complexity. Finally, we validate our findings by means of suitable numerical experiments, ranging from parameter-dependent operators analytically defined to several parametrized PDEs.
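To make step (i) of the abstract concrete, here is a minimal sketch of a POD reduction computed with a truncated SVD of a snapshot matrix. It is an illustration only, not the authors' implementation: the toy parametrized function, the parameter range, the number of modes, and the helper names `pod_basis` and `relative_projection_error` are all assumptions made for this example. The autoencoder and dense network of steps (ii) and (iii) are not shown.

```python
import numpy as np


def pod_basis(snapshots, n_modes):
    """Return the leading n_modes left singular vectors and all singular values."""
    U, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    return U[:, :n_modes], s


def relative_projection_error(snapshots, basis):
    """Relative Frobenius error of projecting the snapshots onto span(basis)."""
    coeffs = basis.T @ snapshots      # POD coordinates of each snapshot
    recon = basis @ coeffs            # lift back to the full space
    return np.linalg.norm(snapshots - recon) / np.linalg.norm(snapshots)


# Hypothetical toy data: one column per parameter value mu (assumption).
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 200)
params = rng.uniform(1.0, 3.0, size=50)
snapshots = np.stack(
    [np.sin(np.pi * mu * x) * np.exp(-mu * x) for mu in params], axis=1
)  # shape (200, 50)

n_modes = 10
basis, sing_vals = pod_basis(snapshots, n_modes)
err = relative_projection_error(snapshots, basis)

# For the Frobenius norm, the projection error equals the energy in the
# discarded singular values, which is what makes the POD dimension a
# controllable source of error.
tail = np.sqrt(np.sum(sing_vals[n_modes:] ** 2) / np.sum(sing_vals ** 2))
print(f"relative POD projection error with {n_modes} modes: {err:.3e}")
print(f"predicted from discarded singular values: {tail:.3e}")
```

In a POD-DL-ROM, the coefficients `basis.T @ snapshots` would be the input that the autoencoder compresses further into a handful of latent coordinates.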

You may also like

A new MOX Report entitled “lymph: discontinuous poLYtopal methods for Multi-PHysics differential problems” by Antonietti, P.F., Bonetti, S., Botti, M., Corti, M., […]

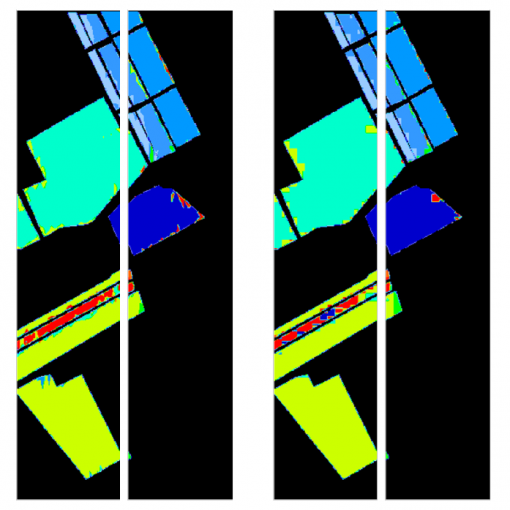

A new MOX Report entitled “A PCA and mesh adaptation-based format for high compression of Earth Observation optical data with applications in […]

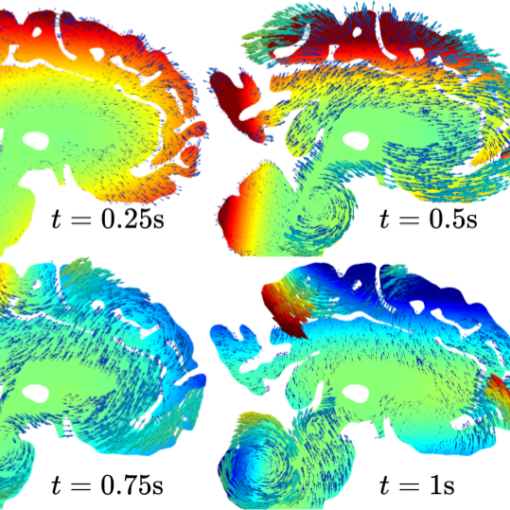

A new MOX Report entitled “Polytopal discontinuous Galerkin discretization of brain multiphysics flow dynamics” by Fumagalli, I.; Corti, M.; Parolini, N.; Antonietti, […]

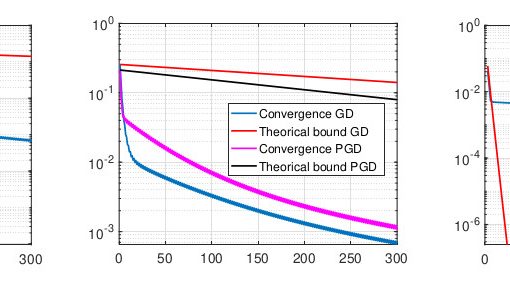

A new MOX Report entitled “Variable reduction as a nonlinear preconditioning approach for optimization problems” by Ciaramella, G.; Vanzan, T. has appeared […]