A new MOX Report entitled “Neural Preconditioning via Krylov Subspace Geometry” by Dimola, N.; Coclite, A.; Zunino, P. has appeared in the MOX Report Collection.

Check it out here: https://www.mate.polimi.it/biblioteca/add/qmox/13-2026.pdf

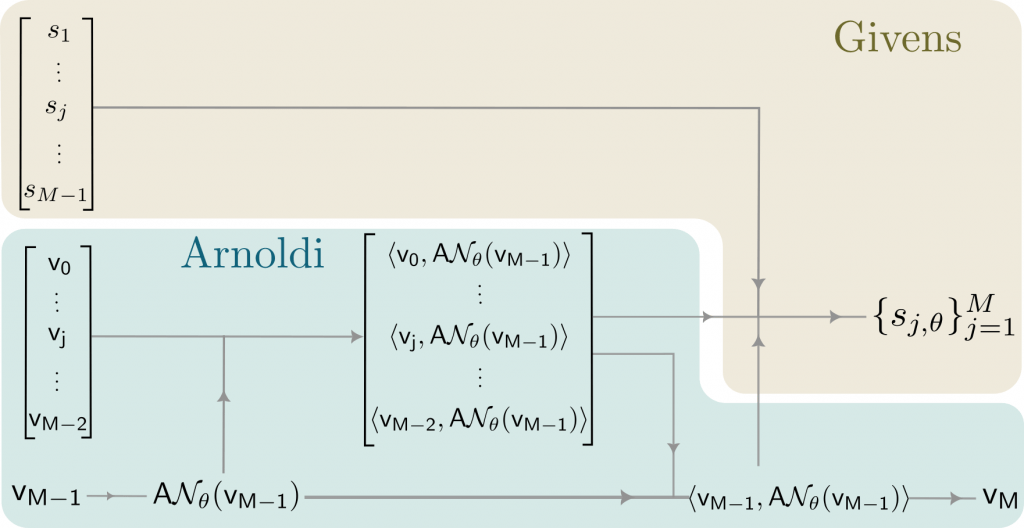

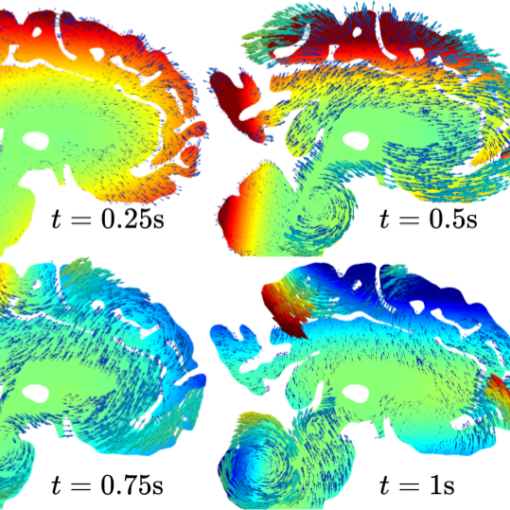

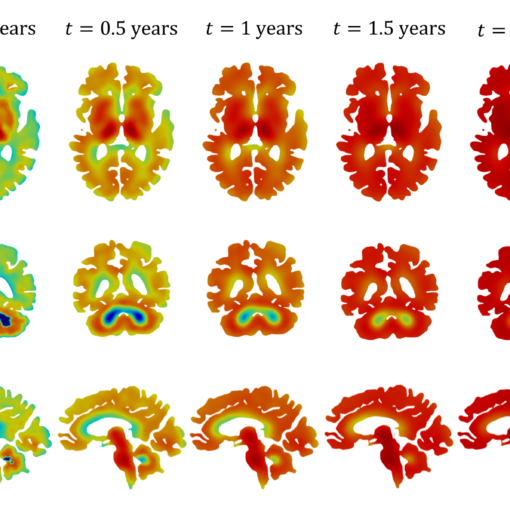

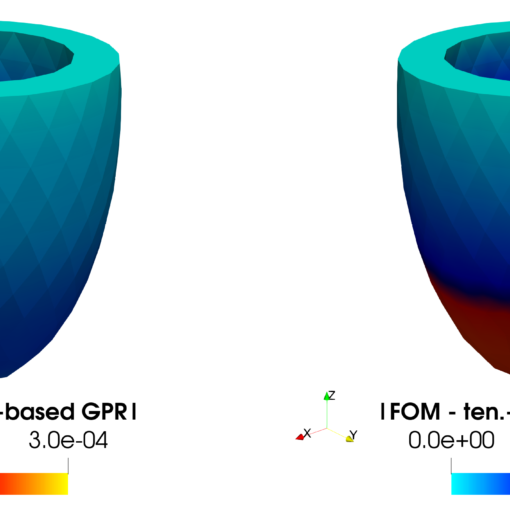

Abstract: We propose a geometry-aware strategy for training neural preconditioners tailored to parametrized linear systems arising from the discretization of mixed-dimensional partial differential equations (PDEs). Such systems are typically ill-conditioned due to embedded lower-dimensional structures and are solved using Krylov subspace methods. Our approach yields an approximation of the inverse operator employing a learning algorithm consisting of a two-stage training framework: an initial static pretraining phase, based on residual minimization, followed by a dynamic fine-tuning phase that incorporates solver convergence dynamics into the training process via a novel loss functional. This dynamic loss is defined by the principal angles between the residuals and the Krylov subspaces. It is evaluated using a differentiable implementation of the Flexible GMRES algorithm, which enables backpropagation through both the Arnoldi process and Givens rotations. The resulting neural preconditioner is explicitly optimized to enhance early-stage convergence and reduce iteration counts across a family of 3D–1D mixed-dimensional problems exhibiting geometric variability in the 1D domain. Numerical experiments show that our solver-aligned approach significantly improves convergence rate, robustness, and generalization.
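
For readers unfamiliar with the geometric quantity the abstract mentions, here is a minimal NumPy sketch of the angle between a residual and a Krylov subspace. It is not the loss from the report, whose exact definition is not given in the abstract, and it uses plain unpreconditioned GMRES rather than Flexible GMRES. It only illustrates a standard fact: after m steps, the relative GMRES residual equals the sine of the angle between the initial residual r0 and the subspace A·K_m(A, r0). The function names, the random test matrix, and the sizes n and m are all illustrative assumptions.

```python
import numpy as np

def krylov_basis(A, r0, m):
    """Orthonormal basis of K_m(A, r0) via Arnoldi with modified Gram-Schmidt."""
    n = r0.shape[0]
    Q = np.zeros((n, m))
    Q[:, 0] = r0 / np.linalg.norm(r0)
    for j in range(1, m):
        w = A @ Q[:, j - 1]
        for i in range(j):
            # Remove the component of w along each earlier basis vector.
            w = w - (Q[:, i] @ w) * Q[:, i]
        norm_w = np.linalg.norm(w)
        if norm_w < 1e-12:
            # Breakdown: the Krylov subspace stopped growing.
            return Q[:, :j]
        Q[:, j] = w / norm_w
    return Q

def principal_angle(v, Q):
    """Angle between vector v and span(Q); Q must have orthonormal columns."""
    cos_theta = np.linalg.norm(Q.T @ v) / np.linalg.norm(v)
    return np.arccos(np.clip(cos_theta, 0.0, 1.0))

def demo(n=50, m=5, seed=0):
    """Compare sin(angle) with the relative GMRES residual (illustrative data)."""
    rng = np.random.default_rng(seed)
    A = np.eye(n) + 0.1 * rng.standard_normal((n, n))  # hypothetical test matrix
    r0 = rng.standard_normal(n)

    Q = krylov_basis(A, r0, m)
    AQ = A @ Q
    W, _ = np.linalg.qr(AQ)  # orthonormal basis of A * K_m(A, r0)
    theta = principal_angle(r0, W)

    # GMRES minimizes ||r0 - A z|| over z in K_m(A, r0): a least-squares problem.
    y, *_ = np.linalg.lstsq(AQ, r0, rcond=None)
    rel_res = np.linalg.norm(r0 - AQ @ y) / np.linalg.norm(r0)
    return np.sin(theta), rel_res

if __name__ == "__main__":
    sin_theta, rel_res = demo()
    print(f"sin(theta) = {sin_theta:.6e}, relative residual = {rel_res:.6e}")
```

The two printed numbers should agree up to rounding, which is why an angle-based quantity can stand in for solver convergence. A trainable version along the lines described in the abstract would need these steps written in an automatic-differentiation framework, which this sketch does not attempt.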